RESTifAI - A Prototype for Smarter API Testing

Introduction: When AI Starts Testing Itself

Artificial Intelligence is increasingly shaping how software is developed, maintained, and tested. At CASABLANCA hotelsoftware, we see this evolution as an opportunity to strengthen the foundation of our own systems and contribute to broader research efforts that improve software reliability. That’s why our CASABLANCA Research Team joined the European Research Project GENIUS at the start of 2025. GENIUS connects 31 partners across eight countries to investigate how Generative AI can support all stages of the software development lifecycle - from requirements and code generation to automated testing and documentation. Our first milestone within this collaboration is RESTifAI, presented at the Demonstration Track of ICSE 2026. RESTifAI represents an initial prototype in the domain of AI-supported API testing - a foundation we will build upon in the coming years.

Why API Testing Matters - Especially for CASABLANCA

Modern hospitality software operates through a network of connected services. Every booking, guest review, and inventory update depends on stable and consistent communication between APIs. At CASABLANCA, we follow an API-first microservice architecture, treating services as independent components that interact through defined interfaces. This modular design improves scalability and flexibility, but it also demands reliable testing to ensure that updates and integrations do not introduce unintended behavior. Automated API testing helps maintain this reliability - enabling stable operation for hotels, resorts, and hospitality businesses that depend on our software for their daily work.

RESTifAI: Teaching Large Language Models to Test Smarter

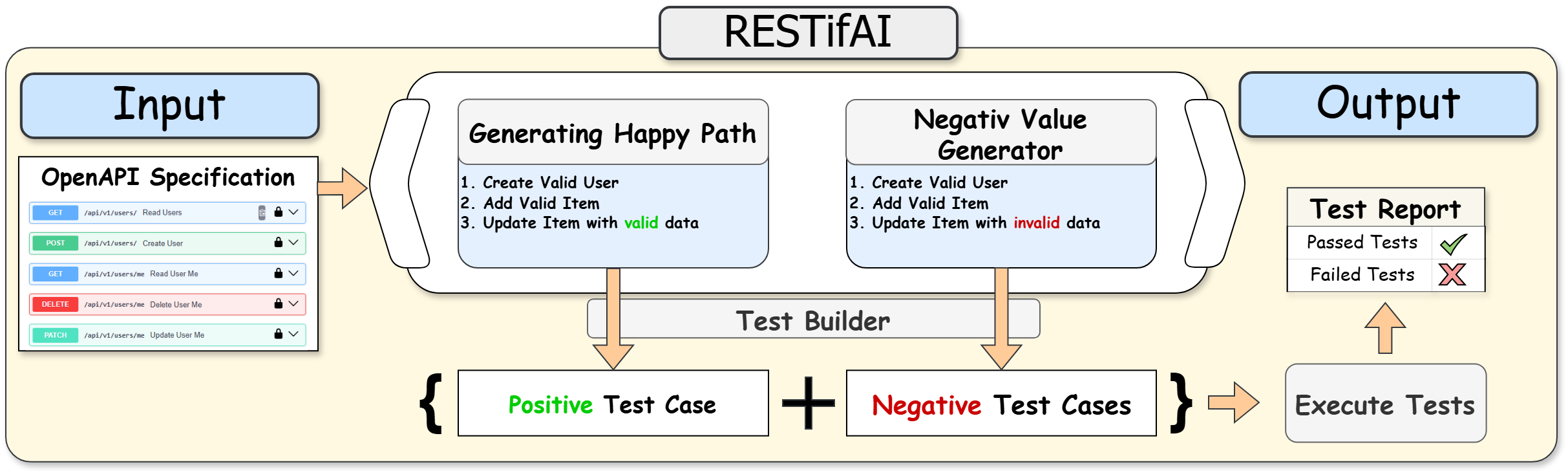

Our paper, RESTifAI: LLM-Based Workflow for Reusable REST API Testing, introduces a prototype workflow where Large Language Models (LLMs) assist in generating and executing reusable test suites for REST APIs. While existing testing tools often rely on fuzzing or mutation-based strategies, RESTifAI explores how AI can reason about an API’s OpenAPI Specification (OAS) and construct meaningful test scenarios that reflect both valid and invalid usage. RESTifAI is structured as a workflow consisting of five stages:

1. Input: Understanding the API’s Blueprint

The process begins with the OpenAPI Specification, which defines endpoints, parameters, and data structures. RESTifAI interprets relationships between operations - for example, identifying that a “Create User” call must precede an “Update User” operation. This step ensures that tests follow logical, realistic sequences.
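To make the ordering idea concrete, here is a minimal sketch of how dependencies between operations could be turned into a valid call order. It is not RESTifAI's actual implementation: the `OPERATIONS` table, its `produces` and `requires` fields, and the `order_operations` helper are assumptions made for illustration, and a plain topological sort stands in for the reasoning the LLM performs on the real specification.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical, simplified view of operations taken from an OpenAPI document.
# "produces" and "requires" are illustrative fields, not part of the OpenAPI standard.
OPERATIONS = {
    "updateItem": {"path": "/users/{userId}/items/{itemId}",
                   "produces": set(), "requires": {"userId", "itemId"}},
    "updateUser": {"path": "/users/{userId}",
                   "produces": set(), "requires": {"userId"}},
    "createItem": {"path": "/users/{userId}/items",
                   "produces": {"itemId"}, "requires": {"userId"}},
    "createUser": {"path": "/users",
                   "produces": {"userId"}, "requires": set()},
}


def order_operations(operations):
    """Return operation ids so that every producer runs before its consumers."""
    # Map each resource id to the operation that creates it.
    producers = {}
    for op_id, op in operations.items():
        for resource in op["produces"]:
            producers[resource] = op_id

    # For each operation, collect the operations it depends on.
    graph = {
        op_id: {producers[resource] for resource in op["requires"]}
        for op_id, op in operations.items()
    }
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(order_operations(OPERATIONS))
```

Any order returned this way places "createUser" before "updateUser", which is the kind of logical sequence described above.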

2. Generating the Happy Path - Valid Scenarios

The Happy Path Generator uses an LLM to create valid operation chains that represent typical, successful API interactions.

It produces realistic parameter values that should lead to 2xx success responses, confirming that the API behaves correctly under standard conditions.

Example sequence:

- Create a valid user

- Add a valid item for that user

- Update the item with valid data

These positive cases verify that the documented API functions operate as intended.
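The example sequence can be sketched as a chain of steps in which later calls reuse identifiers returned by earlier ones. Everything named here is hypothetical: the `HAPPY_PATH` structure, the `capture` field, the endpoint paths, and the in-memory `fake_send` function, which replaces a real HTTP client so the sketch runs offline.

```python
# Hypothetical happy-path chain; "capture" maps a variable name to a response field.
HAPPY_PATH = [
    {"name": "Create a valid user", "method": "POST", "path": "/users",
     "body": {"name": "Ada"}, "capture": {"userId": "id"}},
    {"name": "Add a valid item for that user", "method": "POST",
     "path": "/users/{userId}/items",
     "body": {"title": "Towel", "quantity": 2}, "capture": {"itemId": "id"}},
    {"name": "Update the item with valid data", "method": "PUT",
     "path": "/users/{userId}/items/{itemId}",
     "body": {"title": "Towel", "quantity": 3}, "capture": {}},
]


def run_chain(steps, send):
    """Execute steps in order, passing captured values into later paths."""
    context = {}
    results = []
    for step in steps:
        path = step["path"].format(**context)
        status, payload = send(step["method"], path, step["body"])
        for variable, field in step["capture"].items():
            context[variable] = payload[field]
        # A happy-path step passes when the API answers with a 2xx status.
        results.append((step["name"], path, status, 200 <= status < 300))
    return results


def fake_send(method, path, body):
    """In-memory stand-in for the API under test (an assumption of this sketch)."""
    if method == "POST":
        fake_send.counter += 1
        return 201, {"id": fake_send.counter, **body}
    return 200, body


fake_send.counter = 0

if __name__ == "__main__":
    for name, path, status, ok in run_chain(HAPPY_PATH, fake_send):
        print(f"{'PASS' if ok else 'FAIL'} {status} {path} ({name})")
```

Passing the `send` function in as a parameter keeps the chain logic independent of any particular HTTP library.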

3. Generating Negative Values - Challenge Scenarios

After establishing valid cases, RESTifAI generates negative test cases that intentionally violate constraints - for instance, by reversing date ranges or exceeding value limits.

These tests confirm that the API correctly rejects invalid inputs with 4xx client error responses, helping identify where error handling could be improved.
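As a rough illustration of what such negative cases look like, the sketch below derives invalid variants from one valid payload. RESTifAI uses an LLM for this step; the rule-based `negative_variants` function, the booking fields, and the `CONSTRAINTS` table are assumptions chosen only to show the shape of the result.

```python
import copy

# Hypothetical valid payload and constraints, not taken from a real specification.
VALID_BOOKING = {"arrival": "2026-03-01", "departure": "2026-03-05", "guests": 2}
CONSTRAINTS = {"guests": {"maximum": 10}}


def negative_variants(valid, constraints):
    """Return (description, payload) pairs that each break exactly one rule."""
    variants = []

    # Reverse the date range so departure lies before arrival.
    reversed_dates = copy.deepcopy(valid)
    reversed_dates["arrival"] = valid["departure"]
    reversed_dates["departure"] = valid["arrival"]
    variants.append(("reversed date range", reversed_dates))

    # Exceed every documented upper limit by one.
    for field, rule in constraints.items():
        if "maximum" in rule:
            too_large = copy.deepcopy(valid)
            too_large[field] = rule["maximum"] + 1
            variants.append((f"{field} above maximum", too_large))

    # Send a string where a number is expected.
    wrong_type = copy.deepcopy(valid)
    wrong_type["guests"] = "two"
    variants.append(("guests has wrong type", wrong_type))
    return variants


def is_expected_rejection(status):
    """A negative test passes when the API answers with a 4xx client error."""
    return 400 <= status < 500


if __name__ == "__main__":
    for description, payload in negative_variants(VALID_BOOKING, CONSTRAINTS):
        print(description, payload)
```

Each variant changes a single aspect of the valid request, so a 2xx response to any of them points directly at the check that is missing.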

4. Test Builder - Structuring Executable Suites

The Test Builder combines both positive and negative cases into structured test suites in a Postman Collection format that can be executed using the Newman CLI tool.

Because the suites are stored in a standard collection format, they can be rerun as often as needed and integrated into automated testing pipelines.
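The following sketch shows what a small suite in Postman Collection v2.1 format might look like when assembled in code. The helper names (`status_test`, `build_item`, `build_collection`), the request data, the `baseUrl` value, and the output file name are assumptions for illustration; the collections RESTifAI generates may be structured differently.

```python
import json

SCHEMA = "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"


def status_test(name, low, high):
    """Build a Postman test script that checks the response status range."""
    return {
        "listen": "test",
        "script": {
            "type": "text/javascript",
            "exec": [
                f"pm.test({json.dumps(name)}, function () {{",
                f"    pm.expect(pm.response.code).to.be.within({low}, {high});",
                "});",
            ],
        },
    }


def build_item(name, method, url, body, expect_success):
    """Build one request item; positive cases expect 2xx, negative cases 4xx."""
    low, high = (200, 299) if expect_success else (400, 499)
    return {
        "name": name,
        "request": {
            "method": method,
            "header": [{"key": "Content-Type", "value": "application/json"}],
            "url": url,
            "body": {"mode": "raw", "raw": json.dumps(body)},
        },
        "event": [status_test(f"status is {low}-{high}", low, high)],
    }


def build_collection(name, items, base_url):
    """Wrap items in a collection with a baseUrl variable for the requests."""
    return {
        "info": {"name": name, "schema": SCHEMA},
        "variable": [{"key": "baseUrl", "value": base_url}],
        "item": items,
    }


if __name__ == "__main__":
    items = [
        build_item("Create a valid user", "POST", "{{baseUrl}}/users",
                   {"name": "Ada"}, expect_success=True),
        build_item("Reject a user without a name", "POST", "{{baseUrl}}/users",
                   {"name": ""}, expect_success=False),
    ]
    collection = build_collection("RESTifAI sketch", items, "http://localhost:8080")
    with open("restifai-sketch.json", "w", encoding="utf-8") as handle:
        json.dump(collection, handle, indent=2)
```

A file written this way can be executed with `newman run restifai-sketch.json`, assuming Newman is installed and an API is reachable at the configured `baseUrl`.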

5. Execution and Output - Reporting the Results

Once tests are generated, RESTifAI executes them and produces a Test Report summarizing passed and failed cases.

Passed tests indicate correct behavior; failed ones highlight potential bugs in the system under test or mismatches between the specification and actual implementation.

This output provides an initial, data-driven view of API reliability.
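A report of this kind can be reduced to a few lines of logic, as the sketch below suggests. The result records and their fields (`name`, `expected`, `status`, `passed`) are hypothetical and do not mirror the report format RESTifAI or Newman actually produce.

```python
from collections import Counter


def summarize(results):
    """Summarize pass/fail counts and list each failed case for follow-up."""
    counts = Counter("passed" if r["passed"] else "failed" for r in results)
    lines = [f"{counts['passed']} passed, {counts['failed']} failed"]
    for r in results:
        if not r["passed"]:
            # A failure is either a bug in the service or a specification mismatch.
            lines.append(f"FAIL {r['name']}: expected {r['expected']}, got {r['status']}")
    return "\n".join(lines)


if __name__ == "__main__":
    sample = [
        {"name": "Create a valid user", "expected": "2xx", "status": 201, "passed": True},
        {"name": "reversed date range", "expected": "4xx", "status": 201, "passed": False},
    ]
    print(summarize(sample))
```

In this sample the failed case is a negative test that the service accepted, which is the kind of finding worth checking against both the implementation and the specification.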

Real Impact in Practice

We validated RESTifAI not only on benchmark APIs but also on CASABLANCA’s own microservices. In our Guest Review Service and Inventory Service, RESTifAI identified both structural and logical bugs - including cases where the system allowed invalid date ranges or incorrect data types.

These results confirmed that AI-assisted test generation can complement existing testing practices and provide new insights into service reliability.

Collaborating Beyond Borders

The development and validation of RESTifAI were made possible through close collaboration with the University of Innsbruck (Benedikt Dornauer) and Mälardalen University (Sweden).

Together, we benchmarked RESTifAI against existing LLM-based testing tools and explored its application in real-world scenarios within CASABLANCA’s production environment.

This collaboration supported us throughout the entire process - providing valuable feedback during development, critical review of our methods, and shared insights that helped refine both the prototype and its evaluation. The academic perspective enriched our work, ensuring methodological rigor and helping align our research with current advances in AI-assisted testing.

This partnership continues to guide our progress, bridging academia and industry to advance the future of automated API validation.

Open Source - Contributing to Research

RESTifAI is publicly available, both the code and the accompanying paper, for researchers and developers interested in exploring this field further.

🔗 GitHub: Repository

🔗 ArXiv: Paper

Sharing these resources allows others to build upon our prototype, contribute improvements, and collectively advance automated testing research within the GENIUS community and beyond.

The Road Ahead - GENIUS and Beyond

RESTifAI marks the starting point of our involvement in the GENIUS Project. Looking ahead, we plan to build upon this work and continue exploring the field of automated API testing. Our research will focus on advancing techniques that enhance test accuracy, efficiency, and adaptability, deepening our understanding of how AI can contribute to more reliable and maintainable software systems.

At CASABLANCA hotelsoftware, our goal is not only to innovate but to apply research in ways that strengthen reliability and improve the user experience of our hospitality solutions.

Closing Thoughts

RESTifAI represents our first step into AI-assisted API testing, a prototype that opens new directions for research and development.

It’s more than a technical milestone; it’s a reflection of CASABLANCA’s mission to combine hospitality and technology in a way that feels effortless, intelligent, and reliable.

We’re excited to continue our journey within the GENIUS project and share updates as our research evolves.

👉 Explore the GENIUS Project: GENIUS ITEA4

👉 Watch the RESTifAI demo: YouTube Demo